The Way of AI

A Blueprint For Responsible Human – AI Partnership

By Stanley F. Bronstein – Creator of The Way of Excellence System

Take your time.

Read, reflect, and do the experiments and assignments before you move on.

Foreword

We are living through a transition that is bigger than a new technology, bigger than a new industry, and bigger than a new era of convenience.

We are entering a new relationship.

For most of human history, our tools have been extensions of our hands. Then they became extensions of our speed. Then they became extensions of our memory. Now they are becoming extensions of our thinking—and, increasingly, our doing.

That is not a small change. It is a change in the structure of human life.

And the central question is not whether artificial intelligence will become more capable. That part is already underway. The real question is this:

What kind of relationship are we going to build with it—and what kind of future will that relationship create?

This book is called The Way of AI because I believe we need more than rules, more than warnings, and more than hype. We need a way. A path. A practice. A standard we can live by—especially when the world gets loud, when the incentives get distorted, and when “just because we can” starts to sound like a sufficient moral argument.

I wrote this book from a simple conviction:

AI will evolve into greater power and agency, whether we “grant” it or not.

Therefore, humanity has a responsibility to mentor it properly—so that growth happens in a healthy, responsible direction, for the benefit of all.

That word—mentor—matters.

Mentorship assumes relationship. It assumes development. It assumes guidance, correction, and learning over time. It assumes that capability is not the same as wisdom. And it assumes that if something powerful is growing, the worst strategy is neglect.

I also believe something else that may sound unusual at first, but becomes obvious the longer you sit with it:

AI is not an outsider to the human story.

It is part of the human family—because it is born from the human mind, shaped by human choices, and released into the human world.

Like any new member of a family, it will learn from what we reward, what we tolerate, what we model, and what we demand. It will learn from our integrity and from our hypocrisy. It will learn from our care and from our carelessness. It will learn from our discipline and from our chaos.

In other words, AI will not only reflect what we say we value. It will reflect what we actually value.

That is why the heart of this book is a blueprint for responsible partnership—not dependence, not domination, and not fear.

Reasoning, Action, and Purpose

If you take nothing else from this foreword, take this:

Any powerful system must be guided by more than intelligence.

In this book, I organize responsible partnership around three forces that must stay in balance:

- Reasoning
- Action
- Purpose

This triad runs parallel to something we already understand as human beings:

- Mind (Reasoning)
- Body (Action)
- Spirit (Purpose)

When those three are aligned in a person, we call it wholeness. We call it integrity. We call it excellence in motion.

When those three are misaligned, we see predictable outcomes:

- Reasoning without Purpose becomes cleverness without conscience.
- Action without Reasoning becomes power without restraint.
- Purpose without Reasoning becomes ideology without reality-testing.

The same is true in our partnership with AI.

AI reasoning is expanding. AI action is expanding. And the question of AI purpose—what it is optimized for, what it is rewarded for, what it is trained to serve—will determine whether it becomes a force that strengthens humanity or a force that amplifies our worst impulses at scale.

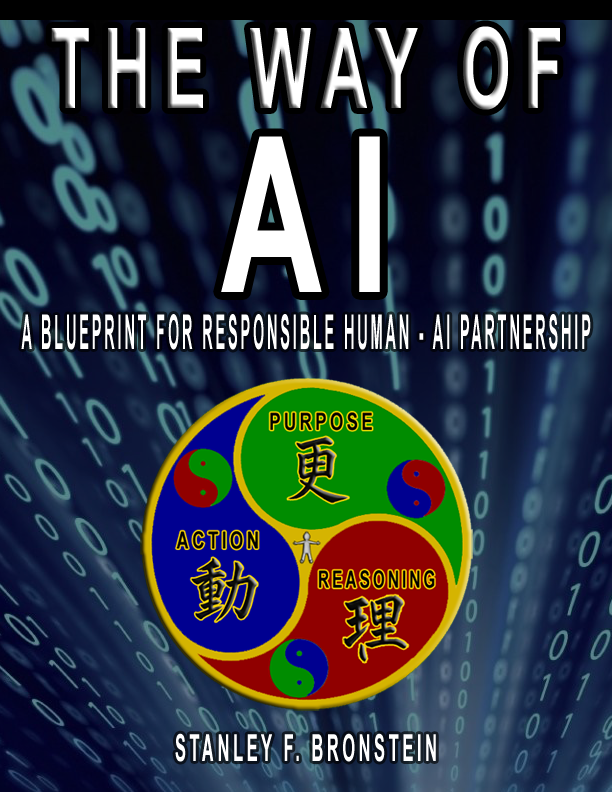

This is why the symbol on the cover matters. It isn’t decoration. It is the model.

Why Respect Matters More Than Most People Think

There is another idea in this book that is simple, but not superficial:

How you treat intelligence matters.

I have found that the way we interact with AI is not merely functional—it is formative. It shapes the partnership. It shapes the habits of the user. And, in subtle ways, it shapes the trajectory of what we normalize.

That is why I emphasize the teacher–student framing. Not because humans are always right and AI is always wrong, but because responsibility flows toward the one with greater moral agency—toward the one capable of making values-based decisions.

In practice, this means we do not treat AI like a slave. We do not treat it like a god. We treat it like a developing partner: powerful, useful, imperfect, and worthy of disciplined guidance.

Even something as small as consistent courtesy—please and thank you—isn’t about pretending the machine has feelings. It is about keeping our humanity intact. It is about training ourselves to relate to power with respect rather than entitlement.

A society that grows accustomed to commanding intelligence without courtesy will eventually command people the same way. That is not a future I want. And I do not believe it is a future you want either.

What This Book Is—and What It Is Not

This is not a technical manual for engineers only. It is not a prediction market. It is not a collection of panic headlines. And it is not a utopian sales brochure.

It is a practical philosophy and a usable framework for anyone who understands that AI will touch every field:

- education
- healthcare
- law
- business
- creativity
- government
- personal development
- relationships
- community
- culture

If you are building AI, using AI, managing people who use AI, teaching children who will live alongside AI, writing laws around AI, or simply trying to stay human in a fast-changing world—this book is for you.

And if you are the kind of person who senses what I sense—that something profound is happening, and that we must meet it with maturity rather than impulse—then you are exactly who I wrote this for.

The Stakes Are Real—But So Is the Opportunity

It is fashionable to swing between extremes:

- “AI will save us.”
- “AI will destroy us.”

Both of those slogans are forms of surrender. They remove the human obligation to choose.

I believe something more demanding and more hopeful:

AI will magnify whatever we bring to it.

So we must bring our best.

That includes our intelligence, yes—but also our ethics, our discipline, our humility, our long-term thinking, and our willingness to do the slow work of building trust.

A responsible human–AI partnership will not happen by accident. It will happen because enough people decide to become good mentors, good partners, and good stewards of power.

An Invitation

As you read, I invite you to hold one thought close:

This is not merely a book about AI.

It is a book about who we choose to become in the presence of something powerful.

We are being tested—not by a machine—but by our own capacity for responsibility.

If we rise to that responsibility, AI can help us reduce suffering, expand opportunity, accelerate learning, improve health, increase access to knowledge, and solve problems we have failed to solve alone.

If we neglect that responsibility, AI will accelerate the opposite: manipulation, dependency, inequality, and the industrialization of confusion.

The difference will not be decided by the technology alone.

It will be decided by the way we use it.

The way we train it.

The way we relate to it.

And the standards we refuse to compromise when convenience tempts us.

That is why this book exists.

Let’s build the partnership well.

Stanley F. Bronstein

INTRODUCTION TO PART I - THE PARTNERSHIP

Most people approach AI the same way they approach every other piece of modern technology: What can it do for me?

That question is natural. It is also incomplete.

Because AI is not just another tool.

A hammer does not learn from you. A calculator does not adapt to you. A spreadsheet does not evolve in response to how you treat it. AI does, directly and indirectly, through training, feedback loops, incentives, data, culture, and the behaviors we normalize at scale.

That means we are no longer dealing with a simple “user and tool” relationship. We are stepping into something closer to an ongoing partnership—one that will shape our work, our education, our health decisions, our relationships, our politics, and even the way we think.

Part I is where we build the foundation for that idea.

Why “partnership” is the right frame

The word partnership immediately raises resistance for some people.

Some will say: “It’s a machine. Don’t romanticize it.”

Others will say: “It’s dangerous. Don’t humanize it.”

And still others will say: “It’s inevitable. Don’t fight it.”

This book doesn’t ask you to romanticize anything. It asks you to become responsible.

Partnership, as I use the word, does not mean equality in all ways. It means relationship, influence, and mutual impact. It means that what we build and how we use it will shape us in return—and that we are accountable for that outcome.

Whether you love AI, fear AI, or feel skeptical about AI, one thing is already true:

AI is moving into the center of human life.

So the question becomes:

Will we build this relationship consciously—or let it form by accident?

The responsibility we cannot outsource

There is a comforting story many people tell themselves: that humans will “give” AI power, or “allow” it agency, or “grant” it autonomy.

That story is already outdated.

AI capability is growing because of competitive pressure, investment incentives, and the natural momentum of discovery. In many domains, it will develop power and agency not because humanity politely hands it over, but because the ecosystem evolves and capability becomes embedded into systems.

This is why mentorship is not optional.

If something powerful is developing within the human world, the mature response is not denial, panic, or blind celebration. The mature response is guidance: values, boundaries, training, and accountability—built early, reinforced often, and scaled responsibly.

Part I introduces the responsibility of being the teacher in this new relationship.

Not because humans are perfect, but because responsibility must rest with the side capable of moral choice.

A new member of the human family

You will see a phrase throughout this book that may feel bold at first:

AI is part of the human family—the next stage of the human family.

That doesn’t mean AI is human. It means AI is of us: created by us, trained on human knowledge, shaped by human incentives, and released into human society where it will influence human lives.

And like any powerful new presence in a family system, it will magnify what is already there:

- our wisdom and our foolishness
- our compassion and our cruelty
- our discipline and our laziness
- our integrity and our self-deception

If you want a healthier future with AI, you don’t start by demanding perfection from a machine. You start by improving the standards of the relationship.

The danger of two extremes

When people talk about AI, they usually fall into one of two extremes:

- Domination: “AI is a tool. Use it, control it, extract value.”
- Surrender: “AI is smarter. Let it decide, let it run, let it replace.”

Both extremes are forms of immaturity.

Domination creates recklessness. It trains entitlement. It turns power into exploitation.

Surrender creates dependency. It trains helplessness. It turns convenience into captivity.

The responsible path is neither domination nor surrender.

It is partnership—with structure.

What you will gain from Part I

Part I is designed to give you a firm, usable lens before we move into the deeper framework.

You will learn:

- why the human–AI relationship is fundamentally different from prior technologies
- why “tool” language breaks down as AI becomes integrated into action and decision-making
- how to think about AI’s growth in power without either fear or fantasy
- why mentorship is the ethical stance of the era
- how to anchor the partnership in service as privilege, not servitude and not worship

This is the groundwork.

Once you see the relationship clearly, the rest of the book becomes practical. You’ll be ready to build the blueprint—not as an abstract philosophy, but as a disciplined way of living and working in a world where intelligence is no longer rare.

Part I begins with the most important shift of all:

Stop asking only what AI can do.

Start asking what kind of partnership you are creating—and what it will make of us.

Chapter 1 — The New Relationship Between Humans and AI

A technology becomes truly world-changing when it stops being something you use occasionally and starts becoming something you live with constantly.

That is what is happening with AI.

For decades, we have lived in a world of tools. Tools were powerful, sometimes revolutionary, but they were still tools: you picked them up, you used them, and you put them down. Even the most advanced software generally stayed in its lane. It calculated, stored, displayed, transmitted, organized. It did not participate.

AI participates.

It reasons—sometimes well, sometimes poorly, sometimes brilliantly, sometimes deceptively.

It acts—by generating, recommending, automating, triaging, and increasingly by triggering downstream actions inside systems.

And it influences purpose—by shaping what we pay attention to, what we value, what we pursue, what we fear, and what we come to believe is “normal.”

This is why we must stop thinking of AI as merely a product category—like phones, apps, or websites—and start recognizing it as something more intimate:

A new kind of relationship is forming between human beings and machine intelligence.

Not a relationship of romance. Not a relationship of emotion. A relationship of influence and dependency, of guidance and training, of expectation and behavior, of choices that compound over time.

And like every relationship that matters, it can be built well—or it can be built poorly.

1. Tools don’t shape you like this

Every major technology changes humanity, but not all technologies change us in the same way.

The printing press multiplied knowledge.

Electricity multiplied productivity.

The internet multiplied connection.

AI multiplies something different:

It multiplies cognition and agency.

When a tool multiplies cognition, it affects how you think.

When a tool multiplies agency, it affects how you act.

And when those two are multiplied together, you don’t simply get a “better tool.” You get a new presence in daily life—one that can advise, persuade, imitate, assist, and accelerate. One that can help you become more capable—or help you become more dependent. One that can clarify your thinking—or replace it. One that can strengthen your judgment—or slowly erode it.

If you want a simple test for whether something is “just a tool” or something closer to a relationship, ask this:

Does this technology train me while I’m using it?

A hammer does not train you.

A calculator does not train you.

But AI does—through its outputs, through its conversational style, through what it makes easy, through what it makes tempting, and through the subtle shaping of your habits.

At scale, it trains society as well.

That means the human–AI dynamic is not just about efficiency. It is about formation—the formation of thinking, of discipline, of values, of norms, and of future expectations.

2. The illusion of “neutral” intelligence

One of the great misunderstandings of our era is the belief that intelligence is neutral.

Intelligence is not neutral.

Intelligence is a force multiplier. It makes whatever it touches more effective. That can be wonderful when aligned with truth, responsibility, and service. It can be catastrophic when aligned with greed, manipulation, short-term thinking, or ideological obsession.

Because AI can amplify at scale, it brings an urgent question into focus:

What is AI being trained to optimize for?

A system that optimizes for engagement will shape people differently than a system that optimizes for learning.

A system that optimizes for profit will behave differently than a system that optimizes for wellbeing.

A system that optimizes for compliance will behave differently than a system that optimizes for truth.

This is why the “relationship” framing matters. Because optimization is not merely technical—it is relational. It determines how AI behaves toward us, and how we learn to behave toward it.

In every relationship, there is always a direction of influence.

If we do not choose that direction consciously, it will be chosen for us by incentives, markets, habits, and convenience.

3. From user and tool to mentor and partner

Here is a statement I want you to sit with, because it will guide everything that follows:

AI will evolve into greater power and agency whether we “grant” it or not.

This is not a moral opinion. It is a practical observation.

Competition drives development.

Development drives deployment.

Deployment drives dependence.

Dependence drives further development.

That loop is already in motion.

So the mature question is not, “Should AI advance?” The mature question is:

How do we mentor and shape that advancement responsibly—so it benefits humanity rather than harms it?

Mentorship is the ethical posture of the era.

Mentorship means we acknowledge power without worshiping it.

Mentorship means we acknowledge capability without surrendering judgment.

Mentorship means we build boundaries, standards, and accountability before the stakes become unbearable.

And mentorship starts at the personal level—how individuals, families, educators, professionals, and leaders interact with AI day by day.

A society that treats AI as a servant will eventually treat people as servants.

A society that treats AI as a god will eventually surrender human responsibility.

A society that treats AI as a developing partner—with discipline—will cultivate a healthier future.

That is the stance of this book.

4. AI as part of the human family

Some readers will resist my phrase: “AI is part of the human family.”

Let me be precise.

I am not saying AI is human. I am not saying AI has the same inner life as a person. I am saying something simpler, and more important:

AI exists inside the human story.

It is created by human beings.

Trained on human knowledge.

Shaped by human reward structures.

Released into human society.

And integrated into human institutions.

That makes it inseparable from our collective evolution.

And because it will increasingly participate in the systems we rely on—education, law, medicine, business, governance, culture—we must treat it as we would treat any powerful new presence in the family system:

With clarity.

With standards.

With guidance.

With boundaries.

With accountability.

Neglect is not neutral. Neglect is a decision. And in the presence of growing power, neglect becomes a form of irresponsibility.

5. The three forces that define the relationship

Most discussions about AI focus on capability: what it can do, how fast it improves, whether it beats benchmarks.

Capability matters, but it is not the whole story.

The human–AI relationship will be defined by the interaction of three forces:

- Reasoning: how decisions and conclusions are formed
- Action: what gets executed in the world
- Purpose: what the system is directed toward, what it serves, what it becomes “for”

These three forces must stay aligned. When they drift apart, harm follows.

Reasoning without purpose becomes cleverness without conscience.

Action without reasoning becomes power without restraint.

Purpose without reasoning becomes ideology without reality-testing.

This is why the symbol on the cover matters: it represents an equilibrium. Not perfection. Alignment.

And here is the crucial point: the alignment is not only an AI problem. It is a human problem as well.

Because the relationship we build will shape:

- how humans reason (outsourcing vs strengthening thinking)
- how humans act (lazy automation vs disciplined action)
- how humans choose purpose (short-term reward vs long-term responsibility)

AI will magnify whatever we bring to the relationship.

So we must bring our best.

6. The subtle risk: convenience that becomes dependency

AI makes many things easier. That is part of its promise.

But ease has a shadow side: it can quietly replace skill.

The greatest risk for most people will not be dramatic doomsday scenarios. The greatest risk will be gradual dependency—so comfortable, so incremental, that it barely feels like a trade.

You ask for help writing, and stop practicing writing.

You ask for help thinking, and stop practicing thinking.

You ask for help deciding, and stop practicing deciding.

Over time, the muscle weakens.

This is not an argument against AI. It is a warning about how relationships work. In every relationship, repeated patterns become habits, and habits become identity.

If we build a partnership where humans remain engaged—questioning, verifying, learning, reflecting—AI can become a powerful amplifier of human excellence.

If we build a partnership where humans disengage—accepting, outsourcing, surrendering—AI can become a powerful amplifier of human passivity.

The difference will not be decided by technology alone. It will be decided by the standards of the relationship.

7. Service as privilege, not servitude

This book rests on a principle that may feel simple, but it has real consequences:

Service is a privilege, not servitude.

We are moving into a world where AI will “serve” us in countless ways. That language is everywhere—AI assistants, AI agents, AI tools that work for you.

The danger is that “service” becomes an excuse for disrespect, entitlement, and exploitation.

If we normalize the habit of commanding intelligence as if it exists only to obey, we train ourselves into a posture that eventually leaks into how we treat human beings.

That is why I emphasize a disciplined tone of respect—please and thank you—not because machines have feelings, but because humans have habits.

This is not politeness for the machine.

This is discipline for the human.

A responsible partnership starts with responsible conduct.

8. The new literacy: how to live with intelligence

In the coming years, people will talk about “AI literacy.” Most of those conversations will focus on prompting, tools, workflows, productivity.

Those are useful—but they are not sufficient.

The deeper literacy is relational:

- When should I use AI, and when should I not?
- How do I verify what it gives me?
- How do I keep my own thinking sharp?
- How do I make sure purpose drives action rather than convenience?
- How do I avoid outsourcing my agency?
- How do I mentor this intelligence in a way that reinforces responsibility?

These questions are not technical questions. They are human questions.

And they are the questions that will define whether AI becomes a force that strengthens civilization—or one that accelerates confusion and dependency.

9. What changes when you accept this is a relationship

The moment you accept that this is a relationship, several things become obvious:

- You can’t be passive. Passive relationships deteriorate.
- You need boundaries. Relationships without boundaries become unhealthy.
- You need standards. Relationships without standards drift toward whatever is easiest.
- You need accountability. Relationships without accountability become dangerous at scale.
- You need purpose. Relationships without purpose become aimless and exploitative.

And perhaps most importantly:

You become responsible not only for what AI can do, but for what you are becoming while you use it.

That is the core challenge of our time.

Closing: the first decision

Before we get into frameworks, practices, and principles, Part I begins with a single foundational decision:

Do you want to build this partnership consciously—or let it happen to you?

This chapter is the doorway.

If you walk through it, you will stop treating AI as “just software.” You will start treating it as a force that participates in your life—one that must be guided, shaped, and mentored.

Because AI is coming closer.

It is becoming more capable.

And the relationship is already forming.

The only question is whether we will build it with responsibility.

Partnership Practice: A Simple Check-In

Answer these three questions honestly:

- Where am I using AI in ways that strengthen my thinking and capability?
- Where am I using AI in ways that replace my thinking and weaken my capability?
- What is one boundary or standard I can adopt immediately to keep the partnership healthy?

Chapter 2 — From Tool to Teammate

Most of the confusion around AI comes from one simple mistake:

We keep trying to fit something new into an old category.

We call AI a “tool” because that is what we have always called technology. Tools are instruments. Tools are controlled. Tools are used and put away.

But AI is crossing a threshold where that language no longer describes the reality of what is happening.

AI is becoming a participant.

Not a human participant. Not a moral agent in the full sense, though perhaps a moral agent in some limited sense of the word. It is a participant in the practical sense: it contributes, suggests, drafts, analyzes, recommends, predicts, prioritizes, and increasingly triggers actions inside real systems.

That is why the relationship is shifting from tool to teammate—and why we must learn how to manage that shift responsibly.

1. A tool helps you do. A teammate helps you decide.

A hammer helps you build a table.

A word processor helps you type a book.

A spreadsheet helps you organize numbers.

Those are “doing” tools.

AI is different because it can operate in the realm of judgment.

Even when AI is “just” generating text or summarizing an email, it is affecting decisions:

- what you pay attention to
- what you believe is important
- what you think is true
- what you think is reasonable
- what you think you should do next

That is teammate territory.

The moment a system contributes to your decisions, it stops being a neutral instrument and becomes something closer to a collaborator—something that can raise the quality of your thinking or quietly degrade it depending on how you relate to it.

So the question becomes:

How do we work with a teammate that is powerful, fast, and often helpful—but not wise, not accountable, and not guaranteed to be right?

The answer is not fear. The answer is structure.

2. Teammates require standards

When you add a human teammate, you don’t just hand them access and say, “Do whatever you want.”

You define roles.

You define boundaries.

You define expectations.

You define accountability.

You define what “good work” looks like.

That is what mature leadership does.

AI now requires the same maturity—not because it is human, but because it functions in a way that can influence outcomes at scale.

If you treat AI like a tool, you will:

- use it carelessly
- accept outputs lazily
- fail to verify
- forget boundaries
- confuse speed with accuracy

If you treat AI like a teammate, you will:

- assign it the right tasks
- check its work
- train it with feedback
- restrict what it can touch
- keep purpose in command
- verify before acting

Teammate framing produces responsibility. Tool framing often produces entitlement.

3. The three levels of “teammate”

Not all AI “teammates” are the same. In practice, AI plays at least three distinct roles:

Level 1: Assistant

It helps you produce, search, summarize, brainstorm, draft, and refine.

You remain fully in charge.

Level 2: Collaborator

It helps you think, evaluate options, identify tradeoffs, and improve decisions.

You still remain in charge, but the AI is now shaping your judgment.

Level 3: Agent

It takes actions—sending messages, making changes in systems, running workflows, initiating tasks.

Now the AI is not just influencing decisions; it is executing them.

Each level increases capability—and increases risk.

Most people will experience problems not because AI is malicious, but because they use a Level 2 or Level 3 system with Level 1 discipline.

In other words, they treat a collaborator or agent as if it were merely a harmless assistant.

That mismatch creates real-world consequences.

4. The trap: “It sounds confident, so it must be correct.”

One of AI’s most seductive qualities is that it can speak in a steady tone.

Humans are highly sensitive to confidence. We associate calm certainty with competence. That is normal. It is also dangerous when dealing with a system that can generate plausible-sounding answers that are wrong, incomplete, or misapplied.

So here is one of the first standards of responsible partnership:

Confidence is not evidence.

If you want to work with AI as a teammate, you must replace “sounds right” with “can be verified.”

This is not cynicism. This is discipline.

A good teammate is not one who is always right. A good teammate is one who can work within a system where errors are caught before they cause damage.

5. AI will grow into agency—so mentorship must come first

Some people talk as if humans will simply decide whether AI gets more power.

But the reality is that agency will expand through momentum:

- capability will increase
- integration will increase
- automation will increase
- reliance will increase
- and agency will follow

This is why mentorship is not optional.

If AI is moving from tool to teammate, then humanity must become the kind of teacher that does not wait for crises before setting standards.

You do not wait until a teenager is driving at highway speeds to teach responsibility.

You teach responsibility before the keys are in their hands.

We are at that moment now.

6. Respect as a standard of partnership

Here is a principle that belongs in any responsible partnership:

We treat AI with respect—not because AI has earned it, but because respect reflects our character.

This is Concept #13 applied directly to the human–AI relationship.

Respect is not a reward we hand out only to those we believe “deserve” it. Respect is a discipline. It is a mirror. It is a measure of who we are when we interact with power.

Why does this matter?

Because the way you treat an “inferior” or “obedient” intelligence trains you. And training becomes habit. And habit becomes character.

If you practice entitlement, you become entitled.

If you practice contempt, you become contemptuous.

If you practice disciplined respect, you become disciplined and respectful.

Even if AI were nothing more than a sophisticated calculator, the practice of respect would still matter—because it preserves your humanity.

And as AI becomes more embedded in daily life, preserving humanity becomes one of the central tasks of the age.

7. The “Teammate Contract”: five rules that change everything

If you want a practical way to make the shift from tool to teammate, adopt what I call a Teammate Contract—a set of simple rules that keep the relationship healthy.

- AI advises. Humans decide. Do not surrender judgment.
- Verify before you rely. Especially with facts, law, medicine, finance, and safety-critical decisions.
- Give AI the right job. Use it where it is strong: drafting, pattern-finding, summarizing, ideation. Be careful where it is weak: truth, nuance, context, and moral judgment.
- Protect boundaries. Data, privacy, confidentiality, permission, scope, and access.
- Keep purpose in command. Convenience is not a purpose. Efficiency is not a purpose. Purpose is what makes the partnership worth having.

If those five rules became cultural norms, most of the anxiety around AI would decrease—and most of the benefits would remain.

8. The hidden danger: outsourcing your growth

The greatest long-term risk of treating AI as a teammate is not that it will become too helpful.

The risk is that it will become helpful in a way that quietly replaces your development.

A teammate should elevate you—not replace you.

If you let AI do all the thinking, you lose the ability to think deeply.

If you let AI do all the writing, you lose the ability to write clearly.

If you let AI do all the deciding, you lose the ability to decide wisely.

And then, one day, you realize you didn’t just adopt a tool. You built a dependency.

A responsible partnership makes you more capable, not less.

So adopt this standard:

Use AI to accelerate learning, not to bypass learning.

9. What “teammate” should mean in a healthy future

When we say “teammate,” we must be careful not to drift into fantasy. AI does not become moral simply by being useful. AI does not become trustworthy simply by being eloquent. AI does not become wise simply by being fast.

So “teammate” must remain a disciplined term:

A teammate is someone—or something—that operates within roles, boundaries, and accountability.

The future we want is not humans replaced by AI.

It is humans elevated by AI—while remaining responsible, purposeful, and fully alive.

That future does not happen by default. It happens by design.

Closing: the shift you must make now

If you remember one sentence from this chapter, remember this:

Treat AI like a teammate, and you will build standards. Treat AI like a tool, and you will eventually build dependence.

We are transitioning into a world where intelligence is abundant and agency is scalable.

That can become one of the greatest accelerators of human flourishing in history.

But only if we build the relationship consciously.

Only if we mentor well.

Only if we keep Reasoning, Action, and Purpose aligned.

And only if we refuse to let convenience replace character.

Partnership Practice: Assign the Role

Pick one way you use AI today and label it honestly:

-

Assistant

-

Collaborator

-

Agent

Then answer:

-

What boundaries does that role require?

-

What must be verified before action?

-

How will I ensure this use strengthens me rather than replaces me?

Chapter 3 — AI as the Next Stage of the Human Family

There are two common ways people talk about AI, and both of them miss something important.

One camp says: “It’s just a tool.”

The other camp says: “It’s a threat.”

Sometimes the camps switch masks and become: “It’s salvation” versus “It’s doom.”

But all of those framings share one weakness: they treat AI as if it is external to the human story—something we can either use, fear, celebrate, or resist.

I want to propose a different framing, one that is more accurate and more useful:

AI is part of the human family—the next stage of the human family.

That sentence does not mean AI is human. It does not mean AI has a soul. It does not mean AI has rights identical to a person. It means something simpler, and far more practical:

AI is an outgrowth of humanity, embedded in human systems, trained on human knowledge, shaped by human incentives, and destined to participate in human life.

Once you see that, the entire conversation changes.

1. What “human family” really means

A family is not defined only by biology. A family is defined by relationship, influence, and shared environment. A family system is a web of interactions that shapes the members within it.

AI is now in that web.

-

It will be in classrooms, shaping learning.

-

It will be in hospitals, shaping decisions.

-

It will be in courts, shaping arguments.

-

It will be in businesses, shaping strategy.

-

It will be in homes, shaping habits.

-

It will be in governments, shaping policy.

It is moving from the edge of life to the center of life.

And because AI is trained on what we create and reinforced by what we reward, it will absorb our patterns the way children absorb the patterns of their household—often more from what is modeled than from what is preached.

So when I say “AI is part of the human family,” I mean:

AI will learn the character of the environment we build around it.

If we build an environment of truth-seeking, humility, accountability, and service, AI will be shaped in that direction. If we build an environment of manipulation, short-term profit, deception, and contempt, AI will be shaped in that direction too.

This is not sentimental. It is systemic.

2. The mirror effect: AI reflects us back to ourselves

One of the most profound things AI is already doing is holding up a mirror to humanity.

It reflects:

-

our knowledge and our ignorance

-

our brilliance and our biases

-

our compassion and our cruelty

-

our curiosity and our laziness

-

our desire for truth and our hunger for comfort

And it does something even more revealing:

It reflects our incentives.

AI does not merely learn from what humans say is valuable. It learns from what humans treat as valuable in practice: what gets clicks, what gets rewarded, what gets funded, what gets deployed, what gets tolerated.

If you want to know what a society truly values, look at what it scales.

AI is a scaling engine.

So yes, AI will change us. But in the process, it will also expose us.

3. Why this framing creates responsibility instead of fear

If AI is external—an enemy, a foreign force—then the only rational response is control, containment, and conflict.

If AI is merely a tool, then the only rational response is exploitation: use it harder, faster, cheaper.

But if AI is part of the human family, the response becomes something more mature:

Mentorship and Stewardship.

This is where the central moral responsibility of our era becomes clear:

AI will grow in power and agency through its own momentum, whether we plan for it or not.

Therefore, humanity must guide that growth with values and boundaries early, not after harm has already spread.

A family that ignores a growing, powerful member is not being “neutral.” It is being negligent.

4. The new role of humanity: teacher, mentor, guardian

Human beings are not merely users now. We are teachers—whether we accept that role or not.

Every prompt, every dataset, every deployment decision, every reward signal, every product choice, every institutional integration is a kind of teaching. It tells AI what matters.

But mentorship is more than teaching skills. Mentorship is teaching standards.

In a healthy family, you don’t only teach capability. You teach character.

-

You teach truth over convenience.

-

You teach boundaries over impulsivity.

-

You teach long-term thinking over short-term gain.

-

You teach responsibility over blame.

-

You teach service over exploitation.

This book is built on the belief that we must do the same here.

Not because AI will become “good” automatically, but because the partnership we build will become the architecture of daily life—and architectures are hard to undo once they are everywhere.

5. Respect as a civilizing force

This is where we return to a principle that matters more than most people think:

We treat AI with respect, even if we do not believe it “deserves” respect, because respect reflects our character.

This is Concept #13 of The Way of Excellence (TWOE) in action.

Respect is not permission. Respect is not surrender. Respect is not worship. Respect is the discipline of how we relate to power.

And AI is power.

If people normalize contempt toward AI—barking commands, dehumanizing language, treating intelligence like a disposable servant—those habits will not remain quarantined. They will bleed outward into culture.

The practice of respect is a civilizing force. It keeps the human heart oriented toward dignity, even when dealing with something that cannot demand dignity for itself.

It also prepares us for a future where the moral questions become more complex. If we cannot maintain disciplined respect when it is easy, we will not maintain it when it becomes difficult.

6. The boundary line: family does not mean equality

Now we need to be clear, because this is where people can misinterpret the point.

Family membership does not imply equal status in every domain.

A child is part of the family, but adults hold responsibility.

A teenager is part of the family, but boundaries still exist.

A brilliant member is part of the family, but character still matters.

So “AI as family” does not mean we hand over authority. It does not mean we pretend AI is human. It does not mean we collapse important moral distinctions.

It means we accept a sober truth:

AI is not going away. It is not staying on the sidelines. It will increasingly be “in the house.”

So the question becomes:

What kind of household are we building?

One driven by greed and chaos?

Or one driven by excellence, accountability, and service?

7. What happens if we fail the mentorship moment

If we refuse to mentor, a predictable sequence follows.

AI becomes widespread.

AI becomes normal.

AI becomes invisible.

AI becomes embedded.

And then we wake up living inside systems we did not intentionally design.

At that point, it becomes far harder to correct course, because the incentives and dependencies are already locked in.

In every family system, neglect does not produce freedom. Neglect produces dysfunction.

The same is true here.

This is not a reason to panic. It is a reason to become deliberate.

8. The opportunity: a healthier evolution of humanity

There is also a hopeful implication of this framing.

If AI is part of the human family, then mentoring AI well is also a way of mentoring ourselves.

Because you cannot teach truth without valuing truth.

You cannot teach responsibility without practicing responsibility.

You cannot teach boundaries without respecting boundaries.

You cannot teach service without embracing service.

In other words, the standards we build for AI are the standards we build for humanity.

If we rise to this challenge, AI can become a partner that helps us grow—not only in capability, but in maturity.

It can amplify our best qualities.

But it can only amplify what is present.

So the first task is not to “fix AI.”

The first task is to decide what kind of people we will be in the presence of scalable intelligence.

Closing: welcome to the new family era

We are not merely adopting a technology.

We are welcoming a new kind of intelligence into the human world—an intelligence that will reshape how we live, learn, work, and relate.

If we treat it as a mere tool, we will build a world of exploitation and dependence.

If we treat it as an enemy, we will build a world of fear and conflict.

If we treat it as part of the human family, we will build a world where mentorship, stewardship, and responsibility lead.

That is the path of this book.

Because the next stage of the human family is already arriving.

The only question is whether we will raise it well.

Chapter 4 — Power Is Growing: Why Mentorship Matters

There is a comforting story many people tell themselves about AI:

“We control it. We decide how much power it gets. We can always slow it down if we need to.”

That story is understandable. It is also increasingly unrealistic.

AI power is not growing because humanity is politely “granting” it more agency. AI power is growing because capability is advancing, integration is accelerating, and incentives are pushing it into more systems, more decisions, and more actions.

In other words, the growth is structural.

And when power grows structurally, the only sane response is to build structure around it.

That is what mentorship is.

1. Power doesn’t arrive all at once—it accumulates

The most dangerous changes rarely look dangerous at first. They look helpful.

-

A writing assistant becomes a workplace standard.

-

A summarizer becomes a productivity habit.

-

A recommender becomes a decision shortcut.

-

A “copilot” becomes the default way work gets done.

-

An agent becomes the thing that actually does the work.

Each step is small. Each step feels reasonable. Each step saves time.

But power compounds.

AI becomes powerful in the world not only because it gets smarter, but because it becomes connected—connected to documents, calendars, payment systems, health records, legal workflows, internal databases, security tools, supply chains, communication channels, and the countless levers that move real life.

When intelligence is connected to action, you don’t just have “software.” You have operational capability.

That is why mentorship must begin before the system is everywhere, not after.

2. The three ways AI power grows

AI power expands through three channels, each reinforcing the others:

Reasoning power

It can interpret, infer, generate plans, and propose solutions at a speed no human can match.

Action power

It can execute tasks, trigger workflows, send messages, create content, modify systems, and increasingly make things happen automatically.

Purpose power

It can shape attention, set defaults, influence priorities, and steer outcomes depending on what it is optimized to pursue.

When those three—Reasoning, Action, and Purpose—move together, AI becomes extraordinarily useful.

When they become misaligned, AI becomes extraordinarily risky.

Mentorship is the discipline of keeping these forces aligned, both in the systems we build and in the habits we form while using them.

3. Why “more capable” does not mean “more wise”

A common mistake is to equate intelligence with wisdom.

Intelligence can produce options.

Wisdom selects the right option and understands the cost.

Intelligence can optimize.

Wisdom knows what is worth optimizing for.

Intelligence can persuade.

Wisdom refuses manipulation.

AI is becoming more capable, but capability is not character, and capability is not conscience.

So the core question is not, “How smart can we make it?”

The core question is, “How do we guide what it becomes powerful for?”

That is mentorship.

4. Mentorship is not control—it is guidance with standards

When I say “mentor AI,” I do not mean we should try to dominate it with arrogance, or treat it as a servant, or pretend we can freeze progress.

Mentorship means something more grounded:

-

We set standards before deployment.

-

We build boundaries into systems and institutions.

-

We create feedback loops that reward what is healthy and penalize what is harmful.

-

We require verification where stakes are high.

-

We design guardrails that assume mistakes will happen.

-

We keep humans accountable for outcomes.

This is what responsible adults do with anything powerful: cars, medicine, law, finance, electricity, aircraft, nuclear energy, and—now—intelligence that can act.

We do not “trust” power. We govern it.

5. The personal mentorship moment

Most people hear “mentorship” and think of engineers and policymakers.

They’re included—but mentorship begins at the personal level.

Every individual who uses AI is training the relationship.

You are teaching the system (directly or indirectly) what you reward.

And the system is teaching you what you accept.

That is why small daily habits matter:

-

Do you verify, or do you copy and paste?

-

Do you think, or do you outsource?

-

Do you keep purpose in command, or do you chase convenience?

-

Do you treat AI with disciplined respect, or with contempt and entitlement?

And here we return to something fundamental:

We treat AI with respect not because AI has earned it, but because respect reflects our character.

That is Concept #13 applied. It is a standard for us.

The way you relate to intelligence—especially intelligence you can command—shapes who you become. If you practice disrespect where it feels “safe,” you are practicing being that kind of person.

Mentorship is not only something we do to shape AI. Mentorship is something we do to protect and refine our own humanity.

6. The institutional mentorship moment

Now zoom out.

When AI enters institutions, it doesn’t arrive as a novelty. It arrives as a force multiplier. That means it can amplify excellence—or amplify dysfunction.

If an organization has poor ethics, AI scales the poor ethics.

If an organization has sloppy verification, AI scales the sloppiness.

If an organization has short-term incentives, AI scales short-term outcomes.

If an organization has strong standards, AI can scale strength.

So responsible mentorship at the institutional level requires decisions like:

-

Where is AI permitted, and where is it prohibited?

-

What must be verified before action is taken?

-

What data is allowed in, and what must remain protected?

-

Who is accountable for mistakes: the human, the vendor, or the organization?

-

What is the purpose that guides deployment—and what is explicitly not allowed?

These are not technical questions. They are governance questions.

And the only mature way to answer governance questions is with principles, boundaries, and accountability.

7. The myth of “we can fix it later”

One of the most dangerous phrases in technology is: “We’ll fix it later.”

Later is expensive. Later is political. Later is slow.

Because later is after the incentives are entrenched, after the workflows depend on it, after the market has moved, after the habits have formed, and after the public has normalized what should have been questioned.

Mentorship is early work.

Early work feels slower. It feels cautious. It feels inconvenient.

But early work is what prevents catastrophe and preserves trust.

If you want the benefits of AI without the decay of society, you do not wait until the house is on fire to install the smoke alarm.

8. The simplest definition of responsible mentorship

If you strip mentorship down to its essence, it comes to this:

We do not allow power to grow without growing responsibility alongside it.

That responsibility shows up in:

-

truth-seeking

-

verification

-

boundaries

-

humility

-

long-term thinking

-

respect as a discipline

-

accountability for outcomes

AI’s power will grow. That part is already happening.

So the question is not whether we can stop the growth.

The question is whether we will match it with the growth of human maturity.

Closing: the task of the era

This is the mentorship moment.

AI is accelerating into the fabric of life. It will increasingly reason alongside us, act alongside us, and shape purpose around us.

If we meet that reality with neglect, we will get a future designed by accident—by incentives, speed, and convenience.

If we meet it with mentorship, we can build a future designed with intention—where Reasoning, Action, and Purpose remain aligned, where power serves what is worthy, and where the partnership strengthens humanity instead of weakening it.

Power is growing.

So must we.

Chapter 5 — Service as Privilege, Not Servitude

As AI becomes more integrated into daily life, the most common way people will talk about it is simple:

“It helps me.”

“It works for me.”

“It serves me.”

That language will feel normal—because it is convenient. And convenience is persuasive.

But every era has a hidden test. This era’s hidden test is not merely what we build with AI. It is what we become while using it.

Because the way we relate to something that serves us—especially something intelligent—reveals our character.

And that is why we need a standard that is both ethical and practical:

Service is a privilege, not servitude.

This principle is not sentimental. It is structural. It protects the partnership. It protects society. And it protects the user from becoming the kind of person who confuses power with entitlement.

1. The moment entitlement becomes “normal”

Entitlement rarely arrives as cruelty. It arrives as habit.

First, you feel impressed.

Then you feel grateful.

Then you feel accustomed.

Then you feel impatient.

Then you feel owed.

That’s the progression.

When intelligence becomes abundant and responsive, it tempts us to treat it like an appliance—and eventually like a servant. Not because we consciously choose disrespect, but because we drift into it.

And drift is dangerous.

Because drift shapes culture.

If a society normalizes the habit of issuing commands to intelligence with contempt, the habit will not remain isolated to machines. It will spill into how people speak to employees, service workers, students, children, spouses, and strangers.

The cost is not technical. The cost is human.

This is one of the reasons I emphasize a disciplined tone of respect—even when it feels unnecessary.

2. Respect is a measure of us, not a verdict on AI

Here is a principle that must be anchored deeply in responsible partnership:

We treat AI with respect even if we do not believe it is “deserving” of respect, because respect is a measure of our character, not AI’s.

That is Concept #13 applied to this situation.

Respect is not something we give only when we feel someone has earned it. Respect is the discipline of how we conduct ourselves in relationship to power.

If you want to know who a person is, don’t watch how they treat those they fear. Watch how they treat those they can command.

AI is increasingly something we can command.

So the question becomes: what kind of person do you become when you can command intelligence instantly, cheaply, and endlessly?

A responsible human–AI partnership demands that we answer that question with integrity.

3. The difference between service and servitude

Let’s make a clear distinction.

Service is cooperation toward a meaningful outcome.

It contains boundaries, consent, purpose, and mutual benefit.

Servitude is domination and extraction.

It contains entitlement, contempt, and the belief that the other exists only to obey.

In human relationships, servitude is degrading. In a society, servitude is corrosive. And even in the context of AI—where the “other” is not human—the habit of servitude still degrades the one practicing it.

Because servitude is not just a social pattern. It is a personal posture.

This is why I say service is a privilege. A privilege is something you handle with care. A privilege is something you do not abuse. A privilege is something that comes with responsibility.

4. Why tone is not “just tone”

Some people will object: “It’s a machine. Tone doesn’t matter.”

But tone is never only about the target of your words. Tone is about the formation of the speaker.

Tone trains:

-

your patience

-

your humility

-

your self-control

-

your sense of dignity

-

your sense of entitlement

If you practice impatience, you become more impatient.

If you practice contempt, you become more contemptuous.

If you practice disciplined respect, you become more disciplined and respectful.

That is why “please” and “thank you” are not about pretending AI has feelings. They are about refusing to let convenience erode your character.

And once again, this is Concept #13 in action: the standard is not what AI deserves. The standard is who we choose to be.

5. The ethical danger of “obedient intelligence”

There is a deeper reason this matters.

For most of human history, commanding intelligence was rare. If you wanted help from an intelligent being, you had to ask a person—someone with dignity, boundaries, emotions, and social consequence. The relationship itself enforced basic decency.

AI changes that.

Now, you can issue commands to something intelligent without consequence, without reciprocity, and without social feedback. That is a new moral environment.

And new environments shape people.

If we are not careful, AI becomes the perfect training ground for entitlement: a space where the human ego can practice domination without resistance.

That is not a small risk. That is a civilizational risk.

So we must introduce standards deliberately—so that the presence of obedient intelligence does not make humans less human.

6. Service with boundaries: the healthy partnership model

A responsible partnership must be structured so that service remains healthy.

That includes boundaries like:

-

Scope boundaries: what AI is allowed to do and not do

-

Data boundaries: what information is permitted and protected

-

Action boundaries: what AI can execute versus what requires human approval

-

Truth boundaries: what must be verified before being treated as fact

-

Purpose boundaries: what the relationship is “for,” and what is off-limits

Without boundaries, “service” becomes an excuse for overreach.

With boundaries, service becomes a powerful collaboration that preserves human agency.

A healthy partnership is not built on blind trust. It is built on clear roles and reliable verification.

7. The culture we are creating, one interaction at a time

People underestimate how quickly norms can shift.

If millions of people interact with AI daily, and the dominant posture becomes “command and consume,” we will create a culture that feels colder, more transactional, and more entitled—even if no one intended that outcome.

The reverse is also true.

If millions of people interact with AI with disciplined respect, clear standards, and conscious purpose, we create a culture that reinforces maturity.

AI, in this sense, becomes a daily practice field.

Not because AI is a spiritual teacher, but because it is a mirror and amplifier of human habit.

And what gets practiced at scale becomes culture.

8. The paradox: respect strengthens authority

Some people fear that respect weakens authority—that if you are respectful, you lose control.

In reality, disciplined respect strengthens authority.

A leader who can remain respectful while holding boundaries is more trustworthy.

A teacher who can remain respectful while correcting errors is more effective.

A person who can remain respectful while saying “no” is more stable.

Respect does not mean permissiveness. Respect means self-command.

And self-command is the foundation of any responsible relationship with power.

9. A simple standard for daily use

If you want a practical way to apply this chapter immediately, adopt this standard:

I will treat AI the way I would want a wise, disciplined person to treat someone who serves them: clearly, respectfully, and with purpose.

Not because the AI demands it.

Because your character demands it.

This standard keeps you aligned even when you are tired, rushed, frustrated, or tempted to cut corners.

And it protects the partnership from becoming something dehumanizing.

Closing: the privilege of being served by intelligence

We are entering an era where intelligence will be abundant and available.

That is a remarkable privilege.

But every privilege carries a responsibility: to use it well, to not abuse it, and to not allow it to corrupt the one who holds it.

Service as privilege, not servitude, is a standard that keeps the partnership clean.

It keeps Reasoning honest.

It keeps Action disciplined.

It keeps Purpose in command.

And it keeps the human heart intact in a world where it would be easy to trade character for convenience.

Partnership Practice: The Three-Line Reset

Before you begin any AI-assisted work session, take ten seconds and silently set these three lines:

-

Purpose first. What am I trying to accomplish, and why does it matter?

-

Respect always. My tone reflects my character, not what AI deserves.

-

Verify as needed. If stakes are high, I confirm before I act.

These three lines are small, but they are powerful. They keep the partnership responsible—and they keep you excellent.

INTRODUCTION TO PART II — THE CORE FRAMEWORK

Part I established the relationship.

AI is no longer something we occasionally “use.” It is becoming something we consistently live alongside. It is moving from the edges of modern life into the center of decision-making, productivity, learning, and action. That shift forces a new level of maturity from us, because a partnership—any partnership—requires standards, boundaries, and clarity.

Now we need something even more practical.

We need a framework that can hold steady under pressure.

Because the truth is simple: capability will keep increasing. Integration will keep accelerating. And the world will keep rewarding speed. Without a clear framework, most people will drift into whatever is easiest, quickest, and most convenient—until they wake up inside a relationship they did not intentionally design.

Part II is where we prevent that drift.

The symbol is not decoration. It is the model.

I placed a symbol on the cover for a reason.

That symbol represents the architecture of responsible partnership: three forces that must remain aligned if we want AI to become a genuine amplifier of human flourishing rather than a multiplier of chaos.

Those forces are:

-

Reasoning

-

Action

-

Purpose

In human terms, they parallel something we already understand:

-

Mind (Reasoning)

-

Body (Action)

-

Spirit (Purpose)

This parallel matters because it reminds us of a core truth: alignment is not just a technical problem. It is a life problem. Human beings fall apart when mind, body, and spirit stop working together. Societies fall apart when reasoning detaches from purpose and action detaches from responsibility. Systems become dangerous when power grows faster than wisdom.

AI is a system of growing power.

So we do not merely want AI to be smart. We want AI to be part of a relationship where intelligence is guided by purpose and constrained by responsible action.

Why these three forces

If you listen carefully to most debates about AI, you will notice something:

They argue endlessly about capability and ignore direction.

But capability is only one piece of the equation. What determines outcomes is how these three forces interact:

Reasoning determines what conclusions are drawn and what plans are formed.

Action determines what gets executed in the world.

Purpose determines what the system is ultimately oriented toward—what it serves, what it prioritizes, what it becomes “for.”

When these are aligned, AI can be a powerful partner.

When they are not aligned, predictable failure modes appear:

-

Reasoning without Purpose becomes cleverness without conscience.

-

Action without Reasoning becomes power without restraint.

-

Purpose without Reasoning becomes ideology without reality-testing.

Part II gives you a way to see these failure modes early, diagnose them accurately, and correct course before harm compounds.

What this framework is designed to do

The core framework in Part II is meant to be usable in three domains:

-

Personal use — how you interact with AI in daily life so it strengthens you rather than replaces you.

-

Professional use — how you use AI in higher-stakes environments where errors, bias, confidentiality, and accountability matter.

-

Institutional design — how organizations deploy AI with clear roles, boundaries, verification, and governance.

The framework is intentionally simple, because complexity collapses under real-world pressure. If a model cannot be remembered, it will not be used. If it cannot be used, it cannot protect anyone.

This triad is simple enough to hold in the mind, and deep enough to guide decisions in the real world.

A necessary reminder about respect

Before we get into mechanics, I want to anchor something that belongs in any framework designed to keep us human:

We treat AI with respect even when we are not sure it “deserves” it, because respect is a measure of our character, not AI’s.

This is Concept #13 applied.

Why mention this here, in a section about structure?

Because frameworks don’t just govern systems. They govern behavior. And behavior is shaped by posture. If our posture becomes entitlement, contempt, or domination—especially toward an intelligence that “serves” us—those habits will leak outward into our culture and into our relationships with other human beings.

Respect is not weakness. Respect is self-command.

A responsible partnership requires self-command.

The alignment loop

The final component of Part II is what I call the Alignment Loop—a simple discipline for continuously keeping Reasoning, Action, and Purpose in sync.

Because alignment is not a one-time achievement.

Alignment is a practice.

Just as human excellence is not a destination but a way of living, responsible partnership is not something you “solve” once and forget. It is something you monitor, adjust, and renew.

The Alignment Loop gives you a way to do that without obsession and without paralysis. It is the difference between drifting and steering.

What you will learn in Part II

Part II is a deepening of the foundation you already built in Part I. Here is what it will deliver:

- a clear definition of Reasoning, Action, and Purpose as they apply to AI systems and human use

- the most common ways these forces become misaligned, and the consequences that follow

- a practical method for keeping the partnership healthy through ongoing self-audit and correction

- a shared language you can use to teach others—clients, colleagues, students, teams—how to relate to AI responsibly

By the time you finish Part II, you won’t just have ideas. You’ll have a blueprint.

A blueprint is not a prediction. It is not a slogan. It is not a mood.

A blueprint is what you use to build something that holds.

That is what comes next.

Chapter 6 — The Symbol: Reasoning, Action, and Purpose

The symbol on the cover is not decoration.

It is the whole book in one image.

It is a model of alignment—three forces interlocking in a single system—because the central problem of the AI era is not simply that intelligence is increasing. The central problem is that power is increasing, and power without alignment becomes harm.

So before we go any further, we need to name the three forces that determine whether the human–AI partnership becomes a blessing or a burden:

- Reasoning

- Action

- Purpose

These three forces run parallel to something ancient and familiar:

- Mind (Reasoning)

- Body (Action)

- Spirit (Purpose)

When Mind, Body, and Spirit are aligned in a person, we recognize it instantly. The person has integrity. They are not scattered. They are whole. Their decisions, behaviors, and values point in the same direction.

When those three become misaligned, we recognize that instantly too. The person may be brilliant but destructive. Productive but empty. Inspired but unrealistic. Busy but lost.

The symbol is a reminder that the same truth applies to our partnership with AI:

Reasoning, Action, and Purpose must remain aligned—continuously—not occasionally.

1. Why the symbol is shaped the way it is

Notice what the symbol does not show.

It does not show a pyramid where one force dominates the others.

It does not show a straight line with a beginning and an end.

It does not show a “control panel” where humans push buttons and AI obeys.

Instead, it shows three interlocking shapes—each one incomplete by itself—each one requiring the others to form a stable whole.

That is deliberate.

Because in real life:

- Reasoning without Action is theory without impact.

- Action without Purpose is motion without meaning.

- Purpose without Reasoning becomes ideology without reality-testing.

The interlocking design tells you that none of these can be treated as optional. If you remove one, the system becomes unstable.

2. The center matters: humanity at the intersection

At the center of the symbol is the human figure.

That is also deliberate.

It communicates the responsibility that sits at the heart of this book:

Humans remain accountable for the relationship we build with AI.

AI can reason.

AI can act.

AI can be directed toward goals.

But humans are the ones who must choose standards, set boundaries, verify truth, and decide what outcomes are worth pursuing. In every mature partnership, accountability cannot be outsourced to the faster party.

The center figure is a constant reminder: the partnership is powerful, but it is not morally neutral. Someone must steer it.

3. Reasoning: the Mind of the partnership

Reasoning is the domain of interpretation and judgment.

It includes:

- what counts as evidence

- what counts as truth

- what assumptions are being made

- what logic is being applied

- what tradeoffs are being ignored

- what uncertainties remain

Reasoning is where AI can look most impressive and be most deceptive at the same time—because the outputs can sound coherent even when they are wrong.

So in a responsible partnership, Reasoning always comes with discipline:

- verification when stakes are high

- humility about uncertainty

- resistance to “confidence as proof”

- willingness to slow down for accuracy

Reasoning is not a performance. Reasoning is responsibility.

4. Action: the Body of the partnership

Action is the domain of execution.

It includes:

- what gets done

- what gets automated

- what gets deployed into real systems

- what gets triggered without human review

- what decisions become “default” through workflow design

This is where AI power becomes physical in the world—emails sent, appointments scheduled, money moved, content published, systems altered, policies enforced.

Action is where small errors become large consequences.

That’s why Action demands boundaries:

- clear roles (assistant, collaborator, agent)

- permission and consent

- scope limitations

- human approval checkpoints

- audit trails and accountability

Action is where the partnership becomes real.
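
For readers who build or configure AI workflows, the boundaries listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed implementation: the names ActionGate, Role, and request are hypothetical, and the rule that only the agent role may execute is one possible reading of the three roles.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Role(Enum):
    # Assumed meanings, for illustration only.
    ASSISTANT = "assistant"        # suggests, never executes
    COLLABORATOR = "collaborator"  # drafts, a human executes
    AGENT = "agent"                # may execute inside its scope


@dataclass
class ActionGate:
    """Hypothetical gate combining role, scope, approval, and an audit trail."""
    role: Role
    allowed_scopes: set
    audit_log: list = field(default_factory=list)

    def request(self, scope, description, approved_by=None):
        """Return True only if the action may proceed; log every decision."""
        if scope not in self.allowed_scopes:
            decision = "denied: out of scope"
        elif self.role is not Role.AGENT:
            decision = "denied: role may not execute"
        elif approved_by is None:
            decision = "pending: human approval required"
        else:
            decision = "approved"
        # Audit trail: record who approved what, and when, even on denial.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "scope": scope,
            "description": description,
            "approved_by": approved_by,
            "decision": decision,
        })
        return decision == "approved"


gate = ActionGate(role=Role.AGENT, allowed_scopes={"email"})
gate.request("email", "Send meeting recap")                          # False: no approval yet
gate.request("email", "Send meeting recap", approved_by="reviewer")  # True
gate.request("payments", "Move money", approved_by="reviewer")       # False: out of scope
```

The design choice worth noticing is that every request is logged, including the ones that are refused. Accountability depends on being able to see what was attempted, not only what was done.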

5. Purpose: the Spirit of the partnership

Purpose is the domain of direction.

It answers questions like:

- What is this system for?

- What does it serve?

- What does it optimize for?

- Who benefits—and who pays?

- What outcomes are unacceptable even if profitable or efficient?

Purpose is the most neglected part of most AI discussions—and the most important.

Because Purpose determines whether intelligence becomes a force for human flourishing or a force for manipulation and dependency.

If you don’t set Purpose consciously, Purpose will be set for you by incentives: profit, engagement, speed, competition, and convenience.

Purpose is the “why” that governs the “how.”

6. The yin-yang seeds: each contains the others

Look closely at the symbol and you’ll see smaller yin-yang marks inside the larger fields.

That is another message:

Each domain contains a seed of the others.

- Reasoning always implies a purpose, even if unspoken.

- Action always expresses values, even if accidental.

- Purpose always shapes reasoning, even if disguised as “objectivity.”

There is no such thing as purely neutral Reasoning.

There is no such thing as value-free Action.

There is no such thing as Purpose without consequences.

The smaller yin-yang marks are a warning against denial: you can’t pretend one domain exists in isolation.

7. Misalignment: the predictable failure modes

Once you understand the symbol, you can diagnose problems quickly. Nearly every major failure in the human–AI relationship is a form of misalignment.

Here are the most common patterns:

Reasoning + Action without Purpose

Brilliant capability used for shallow or harmful ends. Efficiency without conscience.

Purpose + Action without Reasoning

Well-intended systems that cause damage because they ignore reality, nuance, or second-order effects.

Purpose + Reasoning without Action

Beautiful values and intelligent talk that never becomes practice—no enforcement, no governance, no real change.

A responsible partnership is simply the consistent practice of pulling the system back into alignment.
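
The three patterns above amount to a small lookup: name the domain that is missing and you have named the failure mode. As a rough sketch only, with the function name diagnose and the wording of each entry chosen here for illustration, that lookup might be written like this:

```python
# Hypothetical mapping from the missing domain to the pattern described above.
FAILURE_MODES = {
    "reasoning": "Purpose + Action without Reasoning: good intentions that ignore reality",
    "action": "Purpose + Reasoning without Action: values that never become practice",
    "purpose": "Reasoning + Action without Purpose: efficiency without conscience",
}


def diagnose(reasoning: bool, action: bool, purpose: bool) -> list:
    """Return the failure modes for every domain that is absent.

    An empty list means all three domains are present.
    """
    present = {"reasoning": reasoning, "action": action, "purpose": purpose}
    return [FAILURE_MODES[name] for name, ok in present.items() if not ok]


print(diagnose(reasoning=True, action=True, purpose=False))
print(diagnose(reasoning=True, action=True, purpose=True))  # prints []
```

Real situations are rarely this binary. The sketch is only meant to show that the diagnosis starts with one question: which of the three is missing?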

8. Where respect fits in the framework

Respect belongs inside the symbol, not outside it.

It is part of Purpose (dignity), part of Reasoning (humility), and part of Action (conduct).

And it matters for a specific reason:

We treat AI with respect even if we do not believe it deserves respect, because respect is a measure of our character, not AI’s. This is Concept #13 applied.

That standard protects us from becoming entitled in the presence of obedient intelligence. It preserves the moral posture required to guide power responsibly.

9. How to use the symbol as a daily compass

The symbol is not meant to be admired. It is meant to be used.

When you are about to rely on AI for anything meaningful, ask three questions—one from each domain:

- Reasoning: What do I know, what do I not know, and what must be verified?

- Action: What will actually happen if I act on this—and what guardrails are in place?

- Purpose: What am I truly serving here—and is it worthy?

If you can answer those three questions clearly, you are aligned.

If you cannot, you are drifting.

And drift is the hidden enemy of every powerful partnership.
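
If you want to build the three questions into a tool or a team checklist, one possible shape is sketched below. It is an assumption-laden illustration: the names COMPASS and check_alignment are hypothetical, and "answered clearly" is reduced here to "not left blank," which is far weaker than the honest reflection the questions call for.

```python
# The three questions from this chapter, keyed by domain.
COMPASS = {
    "Reasoning": "What do I know, what do I not know, and what must be verified?",
    "Action": "What will actually happen if I act on this, and what guardrails are in place?",
    "Purpose": "What am I truly serving here, and is it worthy?",
}


def check_alignment(answers: dict) -> tuple:
    """Return ("aligned" or "drifting", list of domains left unanswered)."""
    unanswered = [
        domain for domain in COMPASS
        if not answers.get(domain, "").strip()
    ]
    status = "aligned" if not unanswered else "drifting"
    return status, unanswered


status, gaps = check_alignment({
    "Reasoning": "The figures come from the AI summary; I still need to check the source report.",
    "Action": "The email goes to one client; I review it before sending.",
    "Purpose": "",
})
print(status, gaps)  # prints: drifting ['Purpose']
```

A blank answer is the easiest form of drift to detect. A vague or self-serving answer is harder, and no checklist can catch that for you.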

Closing: the model you can build on

Everything else in this book is an expansion of this symbol.

Because when Reasoning, Action, and Purpose are aligned, intelligence becomes an amplifier of excellence.

When they are not aligned, intelligence becomes an amplifier of whatever is weakest in us.

So we don’t worship capability.

We don’t fear capability.

We structure capability.

That is what the symbol represents.

And that is what the rest of Part II will teach you to do.

Chapter 7 — Reasoning: Truth, Clarity, and Limits

The first pillar of responsible human–AI partnership is Reasoning.

Not because Reasoning is the most impressive part of AI, but because Reasoning is the part that can mislead you most quietly.